This decision appears to be a strategic move to balance performance and cost efficiency.

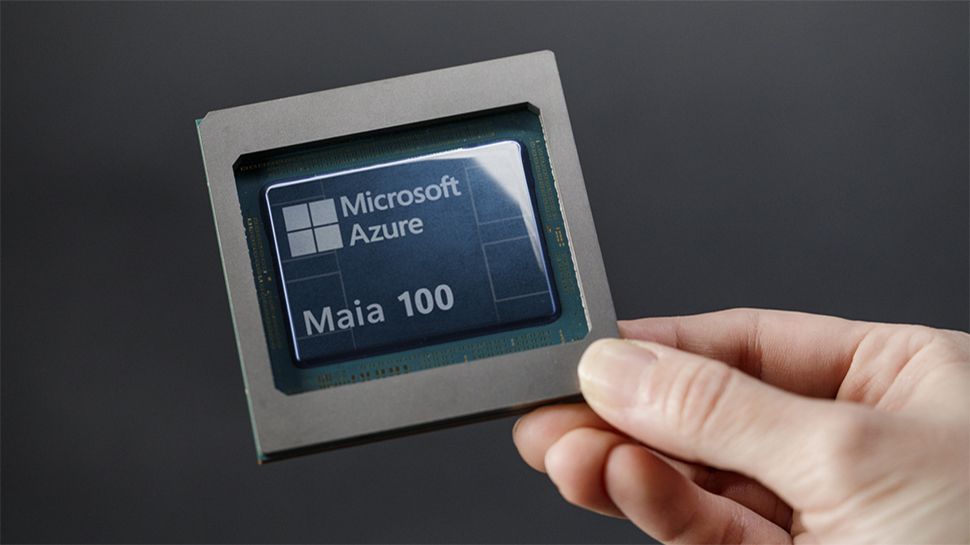

The Maia 100 accelerator is a reticle-size SoC, built on TSMC's N5 process and featuring a CoWoS-S interposer.

It includes four HBM2E memory dies, delivering 1.8 TB/s of bandwidth and 64 GB of capacity, tailored for high-throughput AI workloads.
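To put those aggregate figures in perspective, here is a rough back-of-the-envelope sketch in Python that splits them across the four HBM2E dies. It assumes the four are identical and share bandwidth and capacity evenly, which the figures above do not state explicitly, and the variable and function names are purely illustrative.

```python
# Aggregate figures quoted above for the Maia 100 memory subsystem.
HBM_STACKS = 4
TOTAL_BANDWIDTH_TB_S = 1.8   # terabytes per second, all stacks combined
TOTAL_CAPACITY_GB = 64       # gigabytes, all stacks combined


def per_stack(total, stacks=HBM_STACKS):
    """Split an aggregate figure evenly across the stacks.

    Assumption: all stacks are identical, so an even split is meaningful.
    """
    return total / stacks


# Convert TB/s to GB/s using decimal units (1 TB = 1000 GB).
bandwidth_per_stack_gb_s = per_stack(TOTAL_BANDWIDTH_TB_S * 1000)
capacity_per_stack_gb = per_stack(TOTAL_CAPACITY_GB)

print(f"{bandwidth_per_stack_gb_s:.0f} GB/s per stack")
print(f"{capacity_per_stack_gb:.0f} GB per stack")
```

Under that even-split assumption, each of the four works out to roughly 450 GB/s and 16 GB.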

One would expect it to be less capable than an Nvidia H100, since it has less HBM capacity.

At the same time, it draws a considerable amount of power.

This enables developers to optimize workload performance across different hardware backends without sacrificing efficiency.